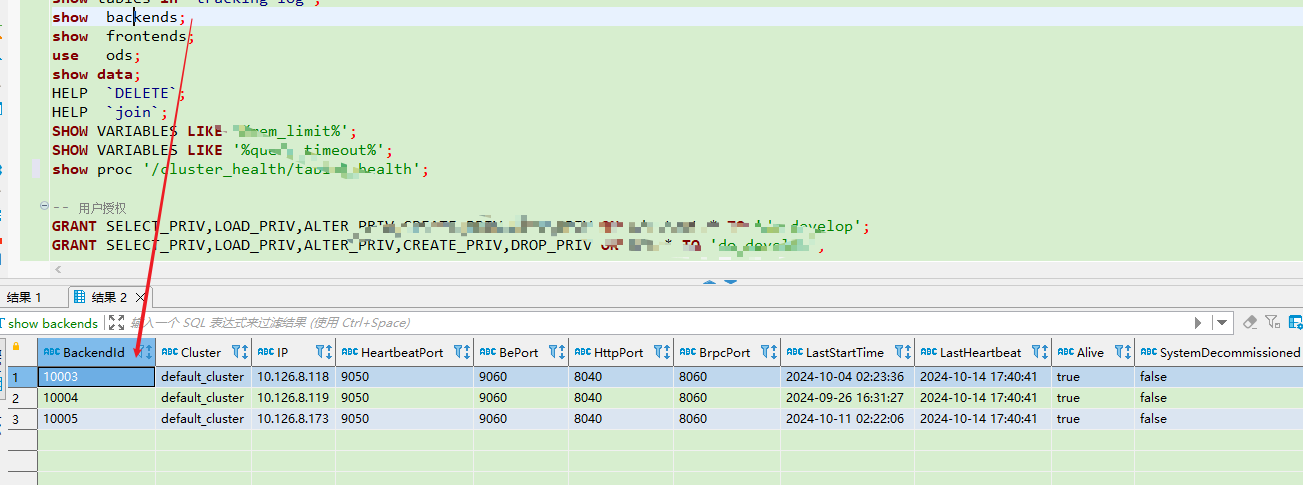

Versions: spark-doris-connector-2.3_2.11-1.1.0.jar and doris-1.2.4-1

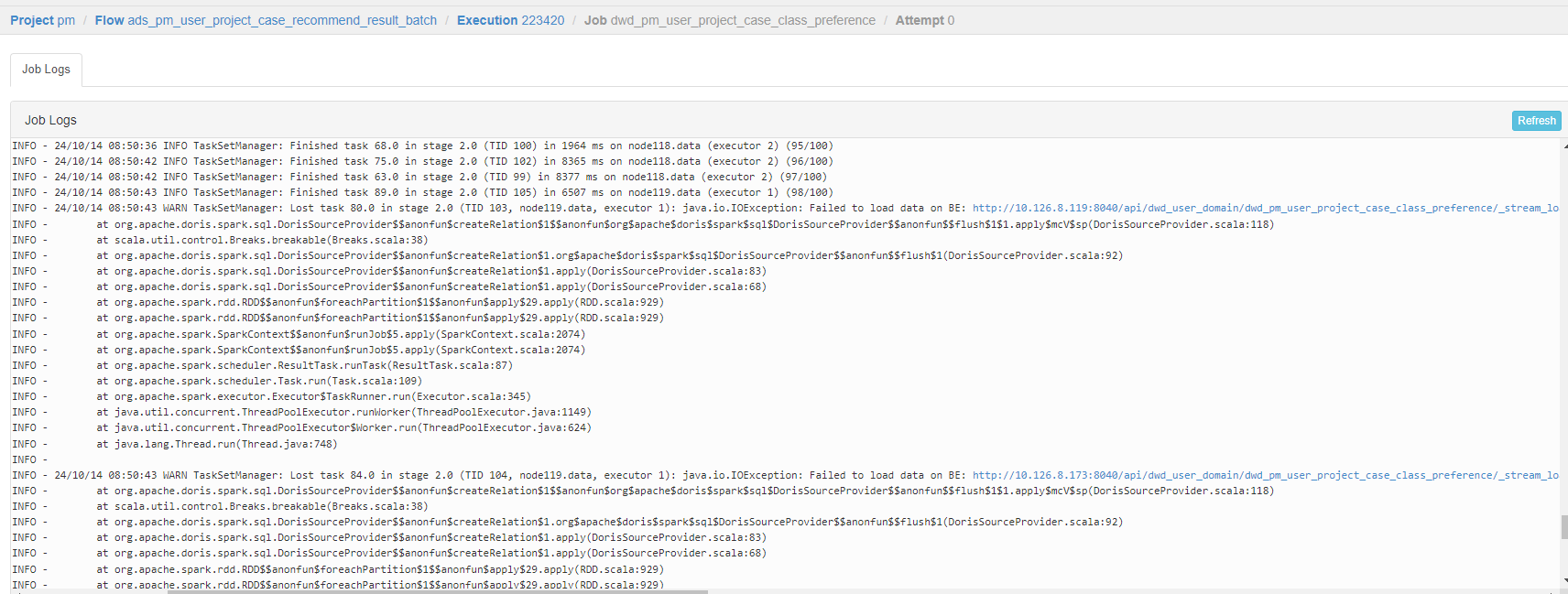

Error reported by Azkaban (excerpt):

```
java.io.IOException: Failed to load data on BE: http://10.126.8.119:8040/api/dwd_user_domain/dwd_pm_user_project_case_class_preference/_stream_load? node and exceeded the max retry times.
14-10-2024 08:50:43 CST at org.apache.doris.spark.sql.DorisSourceProvider$$anonfun$createRelation$1$$anonfun$org$apache$doris$spark$sql$DorisSourceProvider$$anonfun$$flush$1$1.apply$mcV$sp(DorisSourceProvider.scala:118)
14-10-2024 08:50:43 CST at scala.util.control.Breaks.breakable(Breaks.scala:38)
14-10-2024 08:50:43 CST at org.apache.doris.spark.sql.DorisSourceProvider$$anonfun$createRelation$1.org$apache$doris$spark$sql$DorisSourceProvider$$anonfun$$flush$1(DorisSourceProvider.scala:92)
```

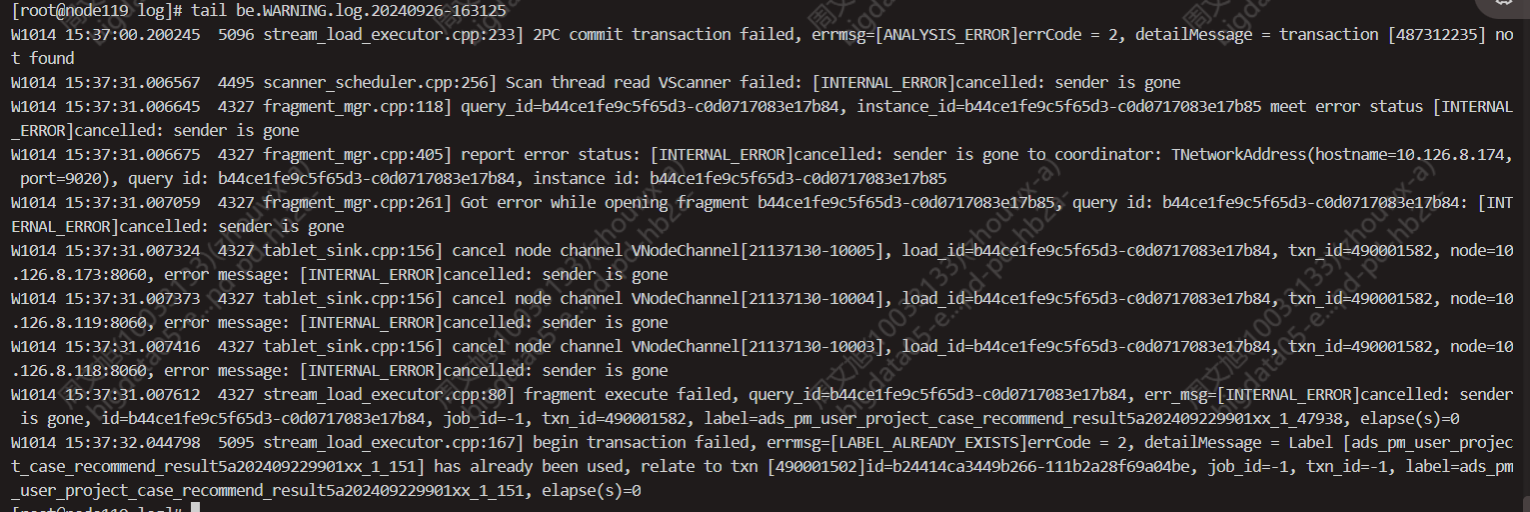

Exception warnings in the BE log:

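Background:

The job used to run normally. After the BEs were restarted to correct clock synchronization, the error above started appearing. (I suspect the large size of the result set may also play a role.)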