Spark version: 3.3.2

Doris version: 2.1.8

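Writing to Doris with connector versions 24.0.0 and 25.0.0 is very slow once the data volume grows (the job basically times out); only small writes (under 10,000 rows) complete normally.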

With connector version 1.3.2, this problem does not occur.

Log:

25/03/07 10:17:47 WARN SparkStringUtils: Truncated the string representation of a plan since it was too large. This behavior can be adjusted by setting 'spark.sql.debug.maxToStringFields'.

25/03/07 10:17:47 INFO CodeGenerator: Code generated in 80.001004 ms

25/03/07 10:17:47 INFO AppendDataExec: Start processing data source write support: org.apache.doris.spark.write.DorisWrite@32b0b92c. The input RDD has 1 partitions.

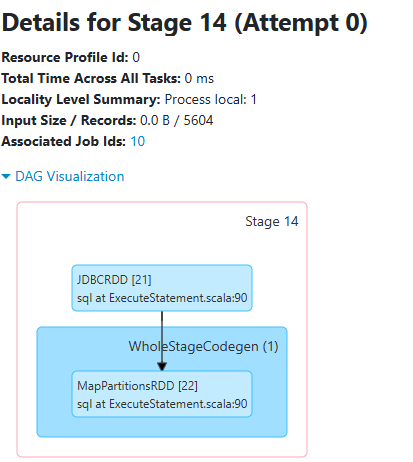

25/03/07 10:17:47 INFO SparkContext: Starting job: sql at ExecuteStatement.scala:90

25/03/07 10:17:47 INFO DAGScheduler: Got job 1 (sql at ExecuteStatement.scala:90) with 1 output partitions

25/03/07 10:17:47 INFO DAGScheduler: Final stage: ResultStage 1 (sql at ExecuteStatement.scala:90)

25/03/07 10:17:47 INFO DAGScheduler: Parents of final stage: List()

25/03/07 10:17:47 INFO DAGScheduler: Missing parents: List()

25/03/07 10:17:47 INFO DAGScheduler: Submitting ResultStage 1 (MapPartitionsRDD[4] at sql at ExecuteStatement.scala:90), which has no missing parents

25/03/07 10:17:47 INFO OperationAuditLogger: operation=240dd74c-2b5e-4c32-bfa4-2633b383b1e3 opType=ExecuteStatement state=COMPILED user=xx session=d25b8b48-4b44-41e6-8be7-da62b3aa1e98

25/03/07 10:17:47 INFO SQLOperationListener: Query [240dd74c-2b5e-4c32-bfa4-2633b383b1e3]: Job 1 started with 1 stages, 1 active jobs running

25/03/07 10:17:47 INFO SQLOperationListener: Query [240dd74c-2b5e-4c32-bfa4-2633b383b1e3]: Stage 1.0 started with 1 tasks, 1 active stages running

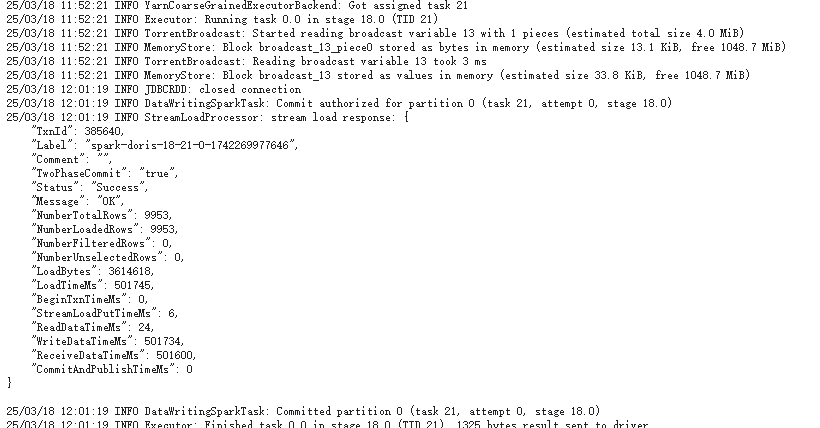

25/03/07 10:17:47 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 40.7 KiB, free 366.3 MiB)

25/03/07 10:17:47 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 15.1 KiB, free 366.2 MiB)

25/03/07 10:17:47 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on xx.com:33785 (size: 15.1 KiB, free: 366.3 MiB)

25/03/07 10:17:47 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1585

25/03/07 10:17:47 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 1 (MapPartitionsRDD[4] at sql at ExecuteStatement.scala:90) (first 15 tasks are for partitions Vector(0))

25/03/07 10:17:47 INFO YarnClusterScheduler: Adding task set 1.0 with 1 tasks resource profile 0

25/03/07 10:17:47 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 1) (xx.com, executor 1, partition 0, PROCESS_LOCAL, 8861 bytes)

25/03/07 10:17:47 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on xx.com:39295 (size: 15.1 KiB, free: 1048.8 MiB)

25/03/07 10:22:42 INFO SparkSQLSessionManager: Checking sessions timeout, current count: 1