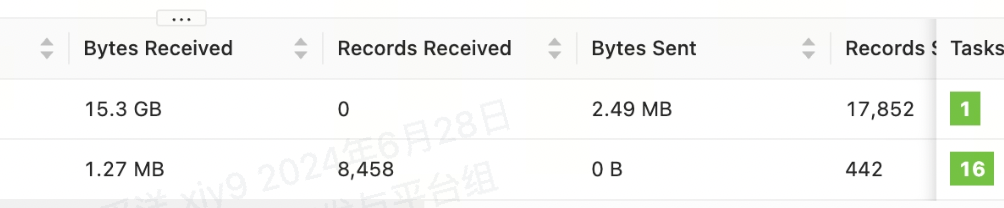

Flink screenshot (version 1.14.5)

Table DDL:

```sql
CREATE TABLE `qfcx_owner_incoming_alldata` (
  `guid` VARCHAR(36) NOT NULL COMMENT 'primary key',
  `area_guid` BIGINT NOT NULL COMMENT 'GUID',
  `object_guid` VARCHAR(36) NULL DEFAULT "" COMMENT 'GUID',
  ...
  `area_name` VARCHAR(200) NULL DEFAULT "" COMMENT 'name'
) ENGINE=OLAP
UNIQUE KEY(`guid`, `area_guid`)
COMMENT 'OLAP'
DISTRIBUTED BY HASH(`area_guid`) BUCKETS 10
PROPERTIES (
  "replication_allocation" = "tag.location.default: 1",
  "min_load_replica_num" = "-1",
  "is_being_synced" = "false",
  "storage_medium" = "hdd",
  "storage_format" = "V2",
  "inverted_index_storage_format" = "V1",
  "enable_unique_key_merge_on_write" = "true",
  "light_schema_change" = "true",
  "store_row_column" = "true",
  "disable_auto_compaction" = "false",
  "enable_single_replica_compaction" = "false",
  "group_commit_interval_ms" = "2000",
  "group_commit_data_bytes" = "134217728"
);
```

Any advice would be much appreciated. Thanks!

Problem description:

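1. We use Flink to read data from Kafka, generate SQL statements from it, and update a Doris wide table in real time over JDBC. With no batching (each statement is executed individually), we only get about 100 to 200 row updates per second.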

2. If we switch to flink-doris-connector, will the update throughput improve, and roughly by how much?
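For comparison, here is a minimal sketch of what a Flink SQL sink table using flink-doris-connector might look like, assuming a connector build matching Flink 1.14 is on the classpath. The FE address, database name, credentials, label prefix, and the `kafka_source` table are placeholders, and only four of the table's columns are shown. The connector loads data into Doris via Stream Load in batches instead of one SQL statement per row; because the target is a merge-on-write Unique Key table, a row whose (`guid`, `area_guid`) key already exists replaces the old row.

```sql
-- Sketch only: connection values below are placeholders, not real settings.
CREATE TABLE doris_sink (
  guid        STRING,
  area_guid   BIGINT,
  object_guid STRING,
  area_name   STRING
) WITH (
  'connector' = 'doris',
  'fenodes' = 'FE_HOST:8030',                                 -- placeholder FE HTTP address
  'table.identifier' = 'your_db.qfcx_owner_incoming_alldata', -- placeholder database name
  'username' = 'your_user',
  'password' = 'your_password',
  'sink.label-prefix' = 'qfcx_owner_incoming'                 -- must be unique per job
);

-- `kafka_source` is a hypothetical Flink table defined on the Kafka topic.
INSERT INTO doris_sink
SELECT guid, area_guid, object_guid, area_name
FROM kafka_source;
```

How much faster this is than row-by-row JDBC depends on batch size, checkpoint interval, and cluster resources, so it would need to be measured on this workload.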