2024-04-16 02:22:35,572 WARN (mysql-nio-pool-4|355) [StmtExecutor.executeByLegacy():776] execute Exception. stmt[138, b3f64e5f1ca6406a-b8976fc01a31d7ee]

org.apache.doris.common.UserException: errCode = 2, detailMessage = get file split failed for table: student, err: java.lang.IllegalArgumentException: bucket is null/empty

at org.apache.doris.planner.external.HiveScanNode.getSplits(HiveScanNode.java:205) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.FileQueryScanNode.createScanRangeLocations(FileQueryScanNode.java:259) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.FileQueryScanNode.doFinalize(FileQueryScanNode.java:218) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.FileQueryScanNode.finalize(FileQueryScanNode.java:205) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.OriginalPlanner.createPlanFragments(OriginalPlanner.java:200) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.OriginalPlanner.plan(OriginalPlanner.java:101) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.qe.StmtExecutor.analyzeAndGenerateQueryPlan(StmtExecutor.java:1141) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.qe.StmtExecutor.analyze(StmtExecutor.java:975) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.qe.StmtExecutor.executeByLegacy(StmtExecutor.java:673) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.qe.StmtExecutor.execute(StmtExecutor.java:448) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.qe.StmtExecutor.execute(StmtExecutor.java:422) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:435) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.qe.ConnectProcessor.dispatch(ConnectProcessor.java:583) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.qe.ConnectProcessor.processOnce(ConnectProcessor.java:834) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.mysql.ReadListener.lambda$handleEvent$0(ReadListener.java:52) ~[doris-fe.jar:1.2-SNAPSHOT]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_201]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_201]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_201]

Caused by: org.apache.doris.datasource.CacheException: failed to get files from partitions in catalog hive

at org.apache.doris.datasource.hive.HiveMetaStoreCache.getFilesByPartitions(HiveMetaStoreCache.java:548) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.datasource.hive.HiveMetaStoreCache.getFilesByPartitionsWithCache(HiveMetaStoreCache.java:512) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.HiveScanNode.getFileSplitByPartitions(HiveScanNode.java:216) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.HiveScanNode.getSplits(HiveScanNode.java:198) ~[doris-fe.jar:1.2-SNAPSHOT]

... 17 more

Caused by: java.util.concurrent.ExecutionException: java.util.concurrent.ExecutionException: java.lang.IllegalArgumentException: bucket is null/empty

at com.google.common.cache.LocalCache.loadAll(LocalCache.java:4131) ~[guava-32.1.2-jre.jar:?]

at com.google.common.cache.LocalCache.getAll(LocalCache.java:4082) ~[guava-32.1.2-jre.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.getAll(LocalCache.java:5028) ~[guava-32.1.2-jre.jar:?]

at org.apache.doris.datasource.hive.HiveMetaStoreCache.getFilesByPartitions(HiveMetaStoreCache.java:535) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.datasource.hive.HiveMetaStoreCache.getFilesByPartitionsWithCache(HiveMetaStoreCache.java:512) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.HiveScanNode.getFileSplitByPartitions(HiveScanNode.java:216) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.HiveScanNode.getSplits(HiveScanNode.java:198) ~[doris-fe.jar:1.2-SNAPSHOT]

... 17 more

Caused by: java.util.concurrent.ExecutionException: java.lang.IllegalArgumentException: bucket is null/empty

at java.util.concurrent.FutureTask.report(FutureTask.java:122) ~[?:1.8.0_201]

at java.util.concurrent.FutureTask.get(FutureTask.java:192) ~[?:1.8.0_201]

at org.apache.doris.common.util.CacheBulkLoader.loadAll(CacheBulkLoader.java:47) ~[doris-fe.jar:1.2-SNAPSHOT]

at com.google.common.cache.LocalCache.loadAll(LocalCache.java:4119) ~[guava-32.1.2-jre.jar:?]

at com.google.common.cache.LocalCache.getAll(LocalCache.java:4082) ~[guava-32.1.2-jre.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.getAll(LocalCache.java:5028) ~[guava-32.1.2-jre.jar:?]

at org.apache.doris.datasource.hive.HiveMetaStoreCache.getFilesByPartitions(HiveMetaStoreCache.java:535) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.datasource.hive.HiveMetaStoreCache.getFilesByPartitionsWithCache(HiveMetaStoreCache.java:512) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.HiveScanNode.getFileSplitByPartitions(HiveScanNode.java:216) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.planner.external.HiveScanNode.getSplits(HiveScanNode.java:198) ~[doris-fe.jar:1.2-SNAPSHOT]

... 17 more

Caused by: java.lang.IllegalArgumentException: bucket is null/empty

at org.apache.hadoop.thirdparty.com.google.common.base.Preconditions.checkArgument(Preconditions.java:144) ~[hive-catalog-shade-1.0.1.jar:1.0.1]

at org.apache.hadoop.fs.s3a.S3AUtils.propagateBucketOptions(S3AUtils.java:1155) ~[hadoop-aws-3.3.6.jar:?]

at org.apache.hadoop.fs.s3a.S3AFileSystem.initialize(S3AFileSystem.java:479) ~[hadoop-aws-3.3.6.jar:?]

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:3611) ~[hadoop-common-3.3.6.jar:?]

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:554) ~[hadoop-common-3.3.6.jar:?]

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:290) ~[hadoop-common-3.3.6.jar:?]

at org.apache.hadoop.mapred.JobConf.getWorkingDirectory(JobConf.java:672) ~[hadoop-mapreduce-client-core-3.3.6.jar:?]

at org.apache.hadoop.mapred.FileInputFormat.setInputPaths(FileInputFormat.java:470) ~[hadoop-mapreduce-client-core-3.3.6.jar:?]

at org.apache.hadoop.mapred.FileInputFormat.setInputPaths(FileInputFormat.java:443) ~[hadoop-mapreduce-client-core-3.3.6.jar:?]

at org.apache.doris.datasource.hive.HiveMetaStoreCache.loadFiles(HiveMetaStoreCache.java:426) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.datasource.hive.HiveMetaStoreCache.access$400(HiveMetaStoreCache.java:112) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.datasource.hive.HiveMetaStoreCache$3.load(HiveMetaStoreCache.java:210) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.datasource.hive.HiveMetaStoreCache$3.load(HiveMetaStoreCache.java:202) ~[doris-fe.jar:1.2-SNAPSHOT]

at org.apache.doris.common.util.CacheBulkLoader.lambda$null$0(CacheBulkLoader.java:42) ~[doris-fe.jar:1.2-SNAPSHOT]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_201]

... 3 more

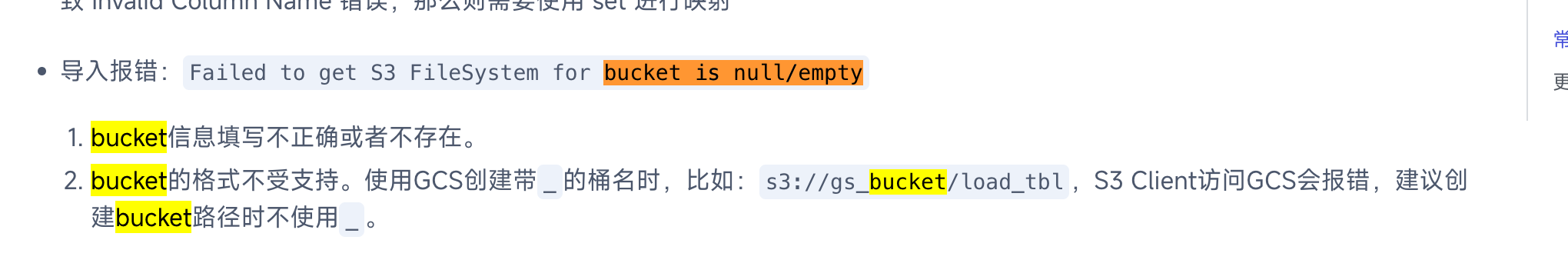
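The innermost `Caused by` block shows the failure path. `FileInputFormat.setInputPaths` calls `JobConf.getWorkingDirectory`, which calls `FileSystem.get`. That resolved to `S3AFileSystem`, and its `initialize` then rejected the bucket in `S3AUtils.propagateBucketOptions`. S3A takes the bucket name from the host part of the filesystem URI. The sketch below uses only `java.net.URI` to show which URI shapes leave that host `null`. The class `BucketCheck` and its methods are hypothetical illustrations, not Doris or Hadoop code. Because the call goes through `JobConf.getWorkingDirectory` and `FileSystem.get(Configuration)`, the URI involved is probably the default filesystem (`fs.defaultFS` as seen by the catalog) rather than the table location. That is an inference from the trace, not something the post confirms.

```java
import java.net.URI;

// Hypothetical helper, not part of Doris or Hadoop: it only mimics how S3A
// derives the bucket name from a filesystem URI (the bucket is the URI host).
final class BucketCheck {

    /** Returns the host component of the URI, or null when it has none. */
    static String bucketOf(String fsUri) {
        return URI.create(fsUri).getHost();
    }

    /** Mirrors the precondition that fails with "bucket is null/empty". */
    static void checkBucket(String fsUri) {
        String bucket = bucketOf(fsUri);
        if (bucket == null || bucket.isEmpty()) {
            throw new IllegalArgumentException("bucket is null/empty");
        }
    }

    public static void main(String[] args) {
        String[] candidates = {
            "s3a://my-bucket/warehouse", // host present, so bucket is "my-bucket"
            "s3a:///warehouse",          // empty authority, so host is null
            "s3a:/warehouse",            // no authority at all, so host is null
        };
        for (String uri : candidates) {
            try {
                checkBucket(uri);
                System.out.println(uri + " -> bucket=" + bucketOf(uri));
            } catch (IllegalArgumentException e) {
                System.out.println(uri + " -> " + e.getMessage());
            }
        }
    }
}
```

Running it prints the bucket for the first URI and `bucket is null/empty` for the other two. If a check like this on the catalog's effective `fs.defaultFS` shows an S3A URI with no bucket, that setting is a likely place to look.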

[Solved] Doris: SELECT on a Hive table fails, while DESC on the same table works fine
